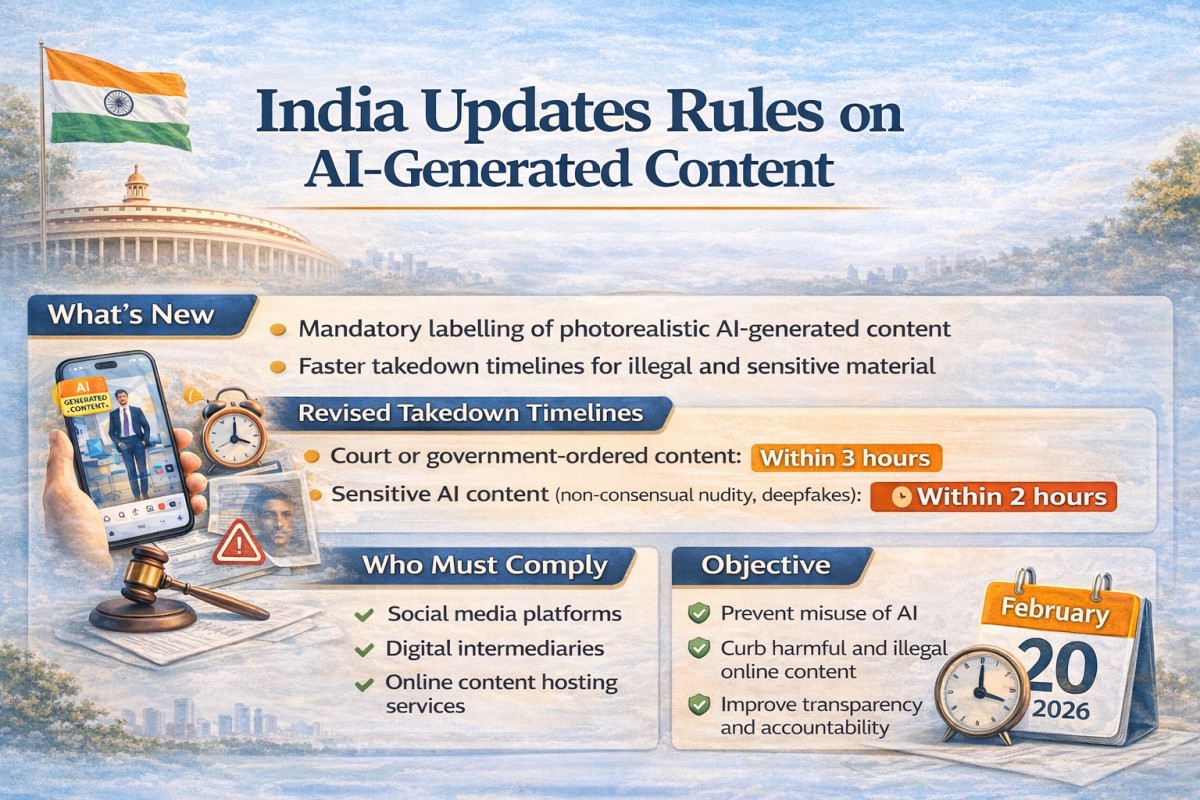

February 10, 2026, New Delhi: The Ministry of Electronics and Information Technology has notified amendments that mandate clear labelling of photorealistic AI-generated content and sharply reduce the time social media platforms have to remove unlawful and sensitive material.

Under the revised framework, digital platforms must comply with takedown orders from the government or a court within three hours, down sharply from the earlier 24–36-hour window. Content deemed illegal by a court or an appropriate government authority must be taken down within this timeframe.

For sensitive material, including non-consensual nudity and deepfakes, the deadline is even tighter: such content must be removed within two hours.

The new requirements, issued as amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, framed under the Information Technology Act, 2000, also make it compulsory for intermediaries to prominently disclose when content has been generated or altered using artificial intelligence, particularly when it is photorealistic or capable of misleading users.

Officials said the amendments are intended to curb the rapid spread of illegal and harmful online content while improving transparency around synthetic media. Platforms have been cautioned that failing to meet the prescribed timelines could cost them their safe harbour protection, the legal immunity intermediaries enjoy from liability for third-party content.

The amended rules are scheduled to come into force on February 20, 2026, giving digital intermediaries just ten days to update their systems and compliance mechanisms.